We build State of the Art AI Systems

From good and bad ideas to world leadership.

In 1989 a recent Oxford University graduate working at the CERN Laboratory in Switzerland invented what is now known as the World Wide Web, the ‘Web’. In the following year he, Tim Berners-Lee, wrote the first Web-based ‘client and server’ software making 1990 the beginning of a technology and communications revolution.

In the years that followed, Tim Berners-Lee and his colleagues sought to refine various specifications of the Web. In 1994, after leaving CERN, he founded the World Wide Web Consortium (W3C), an international consortium “made up of member organizations which maintain full-time staff working together on the development of standards for the Web” to foster compatibility and agreement among industry members in the adoption of new standards defined by the W3C.

In 1999 Berners-Lee and his colleagues at the Massachusetts Institute of Technology (MIT) introduced the concept of a ‘semantic web’ that would enable the World Wide Web to be ‘intelligent’. To encourage development of the concept they announced that it would be royalty-free. At that time the proposed architecture and a high-level proposal of its technology components was incomplete and rudimentary, but it showed promise.

“I have a dream for the Web [in which computers] become capable of analysing all the data on the Web, the content, links, and transactions between people and computers. A Semantic Web, which should make this possible, has yet to emerge, but when it does, the day-to-day mechanisms of trade, bureaucracy and our daily lives will be handled by machines talking to machines. The intelligent agents’ people have touted for ages will finally materialise.”

Professor Sir Tim Berners-Lee, 1999

Five years later, Berners-Lee expressed disappointment at the lack of progress with his “simple idea” of an intelligent Web.

“The Semantic Web is a web of actionable information—information derived from data through a semantic theory for interpreting the symbols. The semantic theory provides an account of “meaning” in which the logical connection of terms establishes interoperability between systems. This was not a new vision. Tim Berners-Lee articulated it at the very first World Wide Web Conference in 1994. This simple idea, however, remains largely unrealized.”

Berners-Lee, Shadbolt, Hall, Scientific American 2006

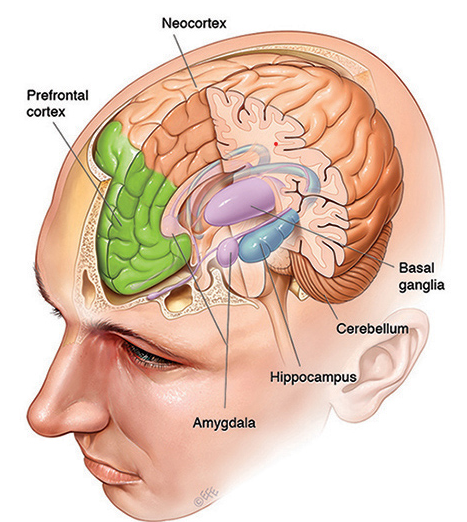

What Berners-Lee didn’t realise is that the proposed model for his intelligent computers dream was not simple. Building computer technology that mimicked a very complex computer such as a human brain required a very complex mathematical model on which to model the computing architecture.

Early attempts at making the Berners-Lee dream a reality encountered myriad challenges and, outside of academia, were largely abandoned.

Core to the challenges was the realisation that for a computer to have ‘intelligence’, it must also have ‘knowledge that it could understand by itself’, without human programming. How to represent computer understandable knowledge was the core challenge, and the subject of much debate within the very few institutions doing that were engaged in the leading research.

Humans accumulate knowledge by accumulating facts and understanding the relationships between those facts, such relationships sometimes involving considerable complexity.

Computing systems at that time didn’t have data architectures that supported storing complex relationships between facts, a necessity in any sophisticated knowledge model.

With the exception of IBM, the large computer system manufacturers abandoned research into the Berners-Lee model for computer intelligence. Some researchers pursued alternative approaches that were simpler to deploy, albeit mathematically impure (potentially creating data integrity issues).

Semantic Software Asia Pacific Limited’s (SSAP) research & development activities stem from the Company’s original Australian patent application, filed on 18 July 2001, and the award of its first patent in the United States, US patent 7464099, on December 8, 2008. A broad Australian patent was subsequently awarded in Australia on 12 March 2009, AU patent 2002318987, titled ‘Content Transfer’.

The original patent application was very broad in covering the use of metadata mapping to automatically transfer data from a database to a file, and vice versa, using a tagging mechanism such as ‘Extensible Mark-up Language’ (XML).

The data transfer took place via an intermediate in-memory operation that created a transient virtual store, a substantial software and hardware technology breakthrough.

This novel approach would automate what was then a largely manual and inefficient process that was

1. A roadblock to transferring data between non-identical systems especially when they were connected via the Web (syntactic interoperability)

2. Largely manual because the computers had no knowledge of the meaning of the data being transferred (semantic interoperability).

We now know that around the time that TBL et al were contemplating the theory that the Web could be made intelligent, the 2001 SSAP patent application described some of the core concepts required to facilitate its intelligence.

Later, in 2006, when TBL lamented the lack of progress with his “simple idea”, SSAP’s first patent application was well progressed in Australia and the United States and a second US patent application was filed on 26 November 2008 and was granted on 24 December 2013 as US patent 8615483.

It is worth noting that US patents are recognised as the ‘gold standard’ for acknowledging the invention of innovative technology from the creation of new knowledge.

Achieving a US patent is an onerous task that inventors must endure as they travel the US patenting examination and jurisdiction process. Our 2001 patent application was allowed in full in Australia without any objections, hence it is described as a ‘broad’ patent. To achieve the same in the United States, our claims were divided into 18 separate patent applications that had to be pursued one-by-one, a laborious and expensive process of responding to well over one hundred objections.

During the period 2009 to 2014 IBM Corporation and SSAP engaged in discussions about the SSAP patent ‘portfolio’. Prior to one of the exchanges in 2012, SSAP commissioned a ‘White Paper’ from a British independent data analyst group, Bloor Research. Until then, SSAP couldn’t establish the likely value of the original Australian and US patents and the pending second series of US patent applications, because there was negligible evidence of similar technologies or their potential applications.

Upon receipt of the ‘White Paper’ a copy was forwarded to IBM Corporation and, surprisingly, received no feedback. In hindsight, the standout revelation from the ‘White Paper’ was found in the concluding paragraph as follows.

“We are advised that Semantic Software is in discussions with various data integration vendors and other potential partners that have expressed an interest in licensing its technology and assisting the company to bring its technology into the mainstream. We can only recommend such an action; the Semantic Software technology will not only simplify existing environments for new and existing users but it will also mean that licensees are well-positioned as we move into the era of the semantic web, which will be dominated by Resource Description Framework (RDF) written in XML. Needless to say, we conclude that any vendor that does not take up such a license, or think of some other way of achieving the same results (which might be difficult given Semantic Software’s patents), is going to be at a disadvantage compared to those that do.”

Philip Howard – Bloor Research, June 2012.

The forecast dominance of RDF/XML in the semantic web was indicative of the value of the company’s patents, but SSAP correctly concluded that the true potential value would only be realised if others licenced the core technology of SSAP’s patents and/or if the company undertook the necessary research and development to create its own intelligent semantic computing system. Again, the challenge seemed more difficult because research activity in the semantic web outside of academia and W3C was not apparent.

The company chose to commit to a comprehensive R&D program with an investment that grew to over A$25M during the period of FY2013 to FY2019.

Various advisers argued that the Company should transfer the R&D program to the United States of America. However, the executive decided that the R&D program would remain in Sydney where we would be able to find and engage the requisite high-quality Australian scientists, technologists, engineers and mathematicians (STEM).

In May 2012 SSAP established an R&D facility in North Sydney and commenced a new program of R&D activities focused on understanding the ramifications of SSAP’s original patent ‘Specification’ for developing technologies that might enable the Berners-Lee dream.

Those R&D activities initially centred on the concepts described in the ‘Specification’ contained in SSAP’s 2001 patent application and the potential use of those concepts in facilitation of artificial intelligence technologies. In parallel with that, SSAP researched and dispensed with much of the Berners-Lee proposed technology components in his concept architecture that were hindering the adoption of his model.

From the outset SSAP required that any of the developed technology solutions from the company’s R&D activities were based on a sound mathematical model called ‘Topology’ which SSAP hypothesised was the model most closely aligned with human brain function. We needed to ensure that any technologies we invented would yield a result consistent with topological outcomes.

If that could not be achieved by technologies implied by our 2001 patent specification, we would have to conduct R&D activities and develop technologies that did yield a result consistent with topological outcomes, and we would seek to patent the knowledge and technology to protect our investment in the intellectual property derived from the company’s R&D activities.

Our R&D activities were required to undertake the following.

1. Validate the Bloor Research opinions

2. Understand the nature of a semantic web, the Berners-Lee dream

3. Evaluate the technologies proposed by Berners-Lee et al, their proposed utility and viability

4. Propose the underlying mathematical model for computational purity

5. Evaluate the adaptability of existing technologies

6. Evaluate proposals and/or models of new and emerging technologies

7. Identify remaining technology gaps and expected new knowledge sought to resolve, or fail trying

8. Establish the working hypothesis to generate the new knowledge through progressive experimentation with validation through technical proof of concept

9. Secure the intellectual property gained from the R&D activities via US patents

In June 2012 the initial research lead to the filing of another eight (8) US ‘continuity’ patent applications consistent with the 2001 patent Specification, all of which have led to US patents being granted between June 2012 and March 2018.

In August 2012 the Company filed a further eight (8) US ‘continuity’ patent applications consistent with the 2001 patent Specification, with all but one now issued, and the final one in the latter stages of the examination process. In summary, 18 patent applications have been filed in the United States to protect the technologies invented consistent with SSAP’s 2001 patent Specification, and they have led to 17 US patents being issued so far and another likely to be issued in 2020.

For those requirements that were additional to those that could be met consistent with the 2001 patent Specification, further research activity focused on other ways to fill the gaps in the Berners-Lee model and usability requirements not proposed by Berners-Lee et al. This has led to eight (8) US patent applications which are considered by SSAP and associated other experts to constitute the fundamental technologies that will facilitate world leading artificial intelligence systems in the near future and beyond.

That we have been so far ahead of the rest of the world with our research is illustrated by our most recent US patent, one of the eight above, being awarded after just one objection that we were able to quickly address in full.

Describing the eventual application of SSAP’s inventions in an ‘intelligent semantic computing system’ facilitating artificial intelligence is possible because the specific application is tangible and delivered as a commercial product/service. However, trying to describe the R&D activities undertaken and the new knowledge and technology (particularly the software engineering) is very difficult for most people as it is intangible to them, appearing foreign if not alien, with new language invoked and the use of existing words with entirely different meanings, e.g. artefacts, concepts.

To look at the company’s patents to get an understanding of the company’s R&D activities is not easy.

As an example, the company’s most recent patent application (Filed 21 March 2016, Published 21 December 2018) titled ‘Semantic Knowledge Base’ reflects some of the new knowledge and technology derived from the R&D activities of FY2015 and FY2016.

The patent’s abstract is as follows:

“A system for categorising and referencing a document using an electronic processing device, wherein: the electronic processing device reviews the content of the document to identify structures within the document; wherein the identified structures are referenced against a library of structures stored in a database; wherein the document is categorised according to the conformance of the identified structures with those of the stored library of structures; and wherein the categorised structure is added to the stored library”.

Laypersons reading this abstract may get an idea of what it may mean or be applicable to, but if they attempt to read the content of the patent, they will most likely be baffled because,

The technology is complex, invoking the complex mathematical model of topology

Patents are drafted by patent attorneys to deliberately obfuscate the underlying new knowledge gained from the company’s R&D activities to extend and protect its possible use and increase its commercial value.

Hence our objective now is to demonstrate its ability to help customers build state-of-the-art Artificial Intelligence systems quickly and become largely self sufficient in doing so within twelve months of starting on the first project. Get started.